
Web scraping for affiliate marketing: a guide on downloading and customising a website to suit your needs.

This article is updated regularly

Last update: 13 March 2025

What is web scraping?

Web scraping means downloading websites as copies to a computer. The technique is used to download entire websites or to extract specific data of interest from a given portal. The whole process is carried out by bots, such as an indexing robot or a script written in Python. Companies can use it, for example, to collect product or service reviews from various platforms, which helps identify patterns in customer satisfaction and areas that need improvement. Meanwhile, market analysis firms can collect data on product and service prices, sales volumes, and consumer trends, which aids in formulating pricing strategy and planning marketing actions.

Thanks to web scraping, analysts can also conduct studies on website user behaviours, analysing aspects like navigation, interactions, and time spent on individual pages. This can assist in optimising the user interface, enhancing user experience, and pinpointing areas that require further refinements.

In medicine and scientific research, web scraping can gather data from scientific publications, clinical trials, or medical websites. This enables the analysis of health trends, the examination of therapy effectiveness, or the identification of discoveries.

In summary, web scraping as a data collection tool for analysis opens doors to a deeper understanding of phenomena, relationships, and trends across various fields. However, it's crucial to remember the ethical and legal aspects of web scraping, exercise caution, and adhere to rules that govern access to both public and private data.

Web scraping in affiliate marketing

How does web scraping relate to affiliate marketing? Let's start with the strongest argument for getting interested in web scraping: the time you save by downloading competitors' websites. Everyone knows, or at least guesses, that creating a good landing page is time-consuming and that success depends, among other things, on time. Other factors are openness to a change of approach, searching for new campaigns, running tests and analysing ads. Success is achieved by those who do not get stuck on trifles but look for ways to scale. To run a single campaign, you need to research the target group, the GEO, the offers, etc., and prepare the campaign materials, including a landing page.

Some people prefer landing pages provided by the affiliate network, others use ready-made templates from page builders, and others still choose to create a landing page from scratch. The first two options are the most common. Sometimes, they can become profitable, but this is a short-term solution as competition is fierce and packages with available templates deplete quickly.

A high-quality landing page is the key to future success and a good return on investment. It is worth adding that not every landing page copied from a competitor will bring the expected result. It is better to fine-tune the chosen landing page to the criteria of your future advertising campaign.

Of course, you must remember to do everything legally, i.e., according to certain rules, which you will learn about in a moment.

Is web scraping legal?

Yes. Web scraping itself is not prohibited, and many companies use it legally. Unfortunately, there will always be someone who starts using the tool for piracy. Web scraping can be used to undercut prices unfairly and to steal copyrighted content. A website owner targeted by a scraper can suffer substantial financial losses. Interestingly, several foreign companies used web scraping to save Instagram and Facebook stories that were meant to be time-limited.

Scraping is fine if you respect copyright and adhere to set standards. However, if you decide to switch to the dark side, which is not accepted at MyLead, you may face various consequences.

Some good practices when scraping websites

Remember about the GDPR

When it comes to EU countries, you must comply with the EU data protection regulation, commonly known as the GDPR. If you aren't scraping personal data, you don't need to worry too much about it. Let us remind you that personal data is any data that can identify a person, for example:

• first and last name,

• email,

• phone number,

• address,

• username (e.g. login/nickname),

• IP address,

• information about the credit or debit card number,

• medical or biometric data.
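
If personal data is not needed for your purpose, one cautious option is to strip it from scraped text before storing anything. The sketch below is only an illustration: the function name `redact_personal_data` and the two patterns are assumptions made for this example, and they cover only e-mail addresses and IPv4 addresses, not every item on the list above.

```python
import re

# Simplified patterns; real-world personal data takes many more forms.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact_personal_data(text: str) -> str:
    """Replace e-mail addresses and IPv4 addresses with placeholders."""
    text = EMAIL_RE.sub("[email removed]", text)
    text = IPV4_RE.sub("[ip removed]", text)
    return text


if __name__ == "__main__":
    # Hypothetical sample text, not taken from any real page.
    sample = "Contact jan.kowalski@example.com from 192.168.0.10 for details."
    print(redact_personal_data(sample))
```

Names, phone numbers and addresses are much harder to detect with patterns, so a filter like this reduces the risk but does not replace a legal review.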

To scrape personal data, you need a lawful reason for storing it. Examples of such reasons include:

1. Legitimate interest

You must be able to prove that processing the data is necessary for a legitimate business purpose. However, this does not apply where that interest is overridden by the interests or fundamental rights and freedoms of the person whose data you want to process.

2. Customer consent

Each person whose data you want to collect must consent to the collection, storage and use of their data as you intend to, e.g., for marketing purposes.

If you have neither a legitimate interest nor customer consent, you are violating the GDPR, which may result in a fine and, depending on national law, a restriction of freedom or imprisonment for up to two years.

Attention!

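The GDPR protects the personal data of people in the European Union, regardless of where the company collecting it is based. Data about people in countries such as the United States, Japan or Afghanistan is generally outside its scope, although other local laws may apply.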

Comply with copyright

Copyright is the exclusive right to any work done, for example an article, photo, video, piece of music, etc. You can guess that copyright is very important in web scraping, because a lot of data on the internet is copyrighted. Of course, there are exceptions in which you can scrape and use data without violating copyright laws, and these are:

• usage for permitted personal use,

• usage for didactic purposes or scientific activity,

• usage under the right to quote.

Web scraping - where to start?

1. URL

The first step is to find the URL of the page you are interested in. Then, specify the topic you want to choose. Your imagination and data sources are your only limitations.

2. HTML code

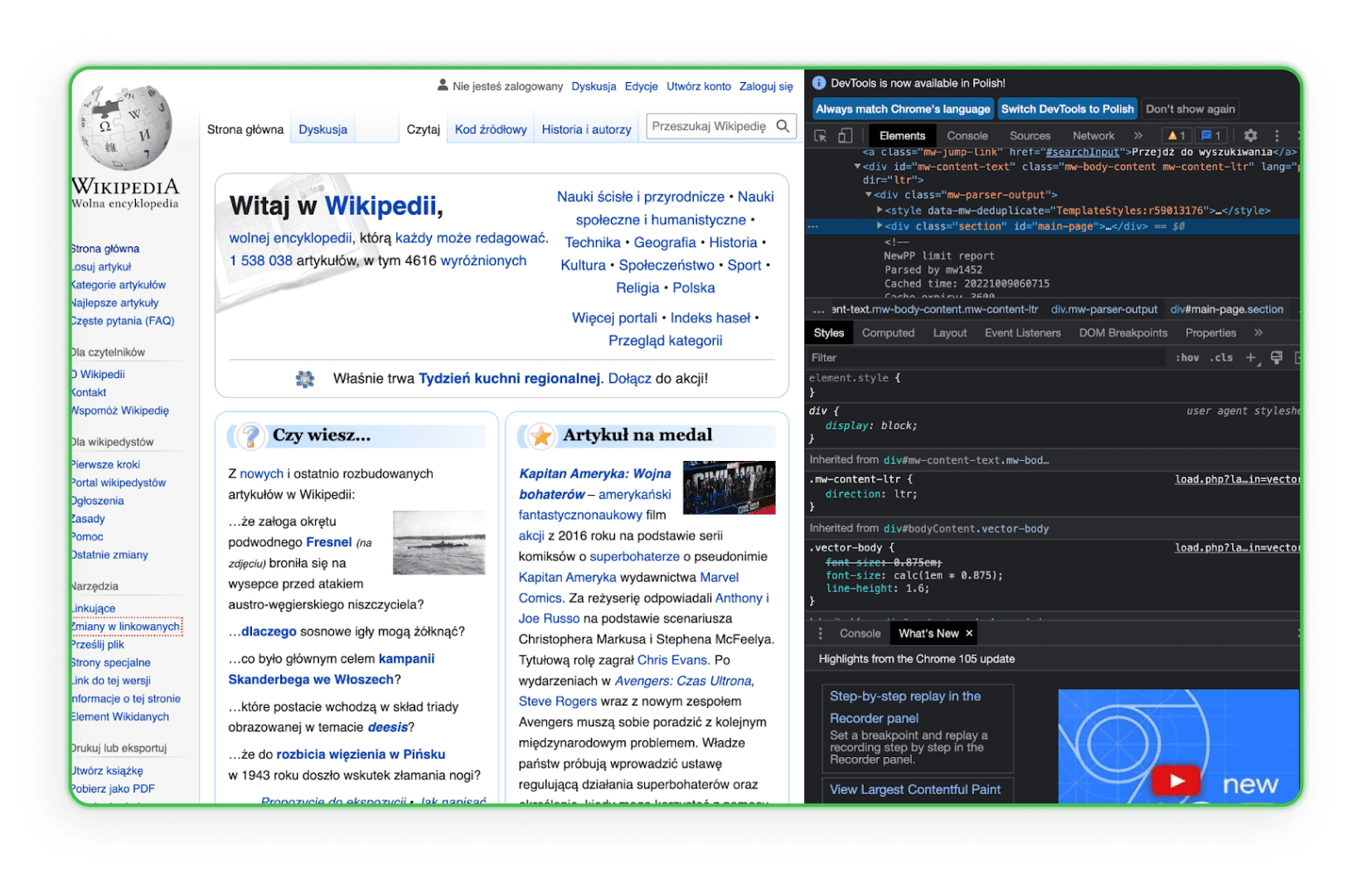

Learn the structure of the HTML code. You need to know HTML to find an item you download from your competitors' websites. The best way is to go to the element in the browser and use the Inspect option. Then, you will see the HTML tags and be able to identify the component of interest. Here's an example of this on Wikipedia:

As you can see, when you hover the mouse over a given line of code, the element corresponding to this line of code is highlighted on the page.
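
The same structure can also be printed from a script. The sketch below uses only Python's standard `html.parser` module to list tags with their `id` and `class` attributes as an indented outline. The class `OutlineParser` and the function `outline` are names invented for this example, and the depth tracking assumes that every non-void tag is closed properly, which real pages do not always guarantee.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not increase the depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class OutlineParser(HTMLParser):
    """Collect an indented outline of tags with their id and class attributes."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.lines = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        label = tag
        if attr_map.get("id"):
            label += "#" + attr_map["id"]
        if attr_map.get("class"):
            label += "." + ".".join(attr_map["class"].split())
        self.lines.append("  " * self.depth + label)
        if tag not in VOID_TAGS:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.depth > 0:
            self.depth -= 1


def outline(html: str) -> list[str]:
    """Return one outline line per opening tag found in `html`."""
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    return parser.lines


if __name__ == "__main__":
    # Hypothetical fragment used only for illustration.
    page = '<div id="content"><h1 class="title big">Hi</h1><img src="a.png"><p>Text</p></div>'
    print("\n".join(outline(page)))
```

The labels use the same `tag#id.class` notation as CSS selectors, which makes it easier to match what the browser inspector shows.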

3. Work environment

Your work environment should be ready. You'll need a text editor later, such as Visual Studio Code, Notepad++ (Windows), TextEdit (macOS), or Sublime Text, so get one now.

Libraries for web scraping - how to save a web page?

Web scraping libraries are organised collections of scripts and functions written in specific programming languages that assist in automatically retrieving data from websites. They allow developers to quickly analyse, filter, and extract content from web pages' HTML or XML code. With them, instead of writing every function manually, developers can use ready-made, optimised solutions for searching, navigating, and manipulating the structure of websites.

Simple HTML DOM Parser - A PHP Library

This tool is for PHP developers and facilitates manipulation and interaction with HTML code. It allows for searching, modifying, or extracting specific sections of the HTML code easily and intuitively.

Beautiful Soup - A Python Library

Beautiful Soup is a Python library designed to parse HTML and XML documents. It has been crafted to easily navigate, search, and modify the DOM tree while providing intuitive interfaces for extracting data from web pages.
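
As a rough illustration of how this looks in practice, the sketch below parses a small HTML fragment and pulls out the headline and all link targets. It assumes the `beautifulsoup4` package is installed; the HTML fragment and the function name `extract_headline_and_links` are made up for this example.

```python
from bs4 import BeautifulSoup

# Hypothetical landing-page fragment used only for illustration.
HTML = """
<html><body>
  <h1 class="headline">Summer offer</h1>
  <a class="cta" href="https://example.com/order">Order now</a>
  <a href="/terms">Terms</a>
</body></html>
"""


def extract_headline_and_links(html: str) -> tuple[str, list[str]]:
    """Return the first <h1> text and every href found in the document."""
    soup = BeautifulSoup(html, "html.parser")
    heading = soup.find("h1")
    headline = heading.get_text(strip=True) if heading else ""
    # href=True skips <a> tags that have no href attribute at all.
    links = [a["href"] for a in soup.find_all("a", href=True)]
    return headline, links


if __name__ == "__main__":
    print(extract_headline_and_links(HTML))
```

The built-in `"html.parser"` backend is used here so that no additional parser needs to be installed.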

Scrapy - A Python Library

Scrapy is a powerful library and framework for web scraping in Python. It enables the creation of specialised bots that can scan pages, follow links, extract the necessary information, and save it in the desired formats. Scrapy is perfect for more complex applications requiring deep web page searching or interaction with forms and other page elements.

Saving the page by the browser

Anyone, including you, can save a selected page to their computer using any browser. The process takes just a few minutes and produces a duplicate of the page as an HTML file and a folder on the user's computer. The copy opens in the browser and usually renders well. However, to save a large website with many pages, the process has to be repeated for each page.

Many companies and freelancers on the Internet will do everything for you for a fee. One of the website copying services is ProWebScraper. A trial version is available that lets you download 100 pages. Later, of course, you will have to pay. The plans start from $40 monthly, depending on how many pages you want to scrape. You can always find another site with a free trial period. It is worth mentioning that some portals allow you to check whether a given page can be copied, because many sites protect themselves against this.
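
For repeated downloads, a short script can take over the manual saving. The sketch below is a minimal example built on Python's standard `urllib.request`; the function name `save_page` and the target path are assumptions for illustration. Unlike the browser's save option, it stores only the HTML document itself, without images, stylesheets or scripts.

```python
from pathlib import Path
from urllib.request import urlopen


def save_page(url: str, destination: Path, timeout: float = 10.0) -> int:
    """Download the raw document at `url` into `destination`; return bytes written."""
    with urlopen(url, timeout=timeout) as response:
        data = response.read()
    # Create the target folder if it does not exist yet.
    destination.parent.mkdir(parents=True, exist_ok=True)
    destination.write_bytes(data)
    return len(data)


if __name__ == "__main__":
    # Hypothetical URL and path; replace them with a page you are allowed to copy.
    size = save_page("https://example.com/", Path("copies/example/index.html"))
    print(f"Saved {size} bytes")
```

Some sites reject requests that do not look like a browser, so a script like this may fail where manual saving works.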

More user-friendly tools for beginners

Not everyone wanting to delve into web scraping is an experienced developer. For those seeking less technical, more intuitive solutions, there are tools specifically designed for ease of use. With visual interfaces and simple mechanisms, the following software allows for efficient data collection from websites without the need for coding.

ZennoPoster

ZennoPoster is an automation and web scraping tool that caters more to those who aren't necessarily programming experts. Its user-friendly visual interface allows for the creation of scraping scripts and other automated browser tasks.

Price: The tool is priced at $37 per month, but it has a 14-day trial period.

Browser Automation Studio

BAS is another user-friendly browser automation and web scraping program. It comes with built-in script creation tools that allow for data extraction, webpage navigation, and many other functions without any programming knowledge.

Price: The tool is free.

Octoparse

Octoparse is a web scraping application that effortlessly collects vast amounts of data from websites. Its visual interface allows users to specify what data they want to collect, and Octoparse handles the rest.

Price: While one version of this tool is free, it has certain restrictions. In the free version, users can store ten tasks in their accounts. All tasks can only be run on local devices using the user's IP. Data export in the free plan is limited to 10,000 rows per export, even though the tool allows for unlimited web page scans in a single run. It can also be used on any number of devices. However, technical support in this version is limited. Paid versions start at $75 per month.

import.io

import.io is a cloud-based web scraping tool that facilitates creating and running scripts to extract data from websites. It also offers features that automatically structure the collected data and convert it into useful formats like Excel or JSON.

Price: The tool offers a free demo, but the pricing for paid packages starts at $399 per month.

Online web scraping services

Online web scraping services work like parsers (component analysers), but their main advantage is that they run online, without downloading and installing a program on your computer. The principle of operation is quite simple. You enter the URL of the page you are interested in, choose the necessary settings (for example, copying the mobile version of the page or renaming all files; the service saves HTML, CSS, JavaScript and fonts) and download the archive. With such a service, a webmaster can save any landing page and then apply their own formatting and any necessary corrections.

Save a Web 2 ZIP

Save a Web 2 ZIP is a popular browser-based web scraping service. Its simple, thoughtful design inspires confidence, and everything is completely free. All you need to do is provide the link to the page you want to copy and choose the options you want, and the archive is ready.

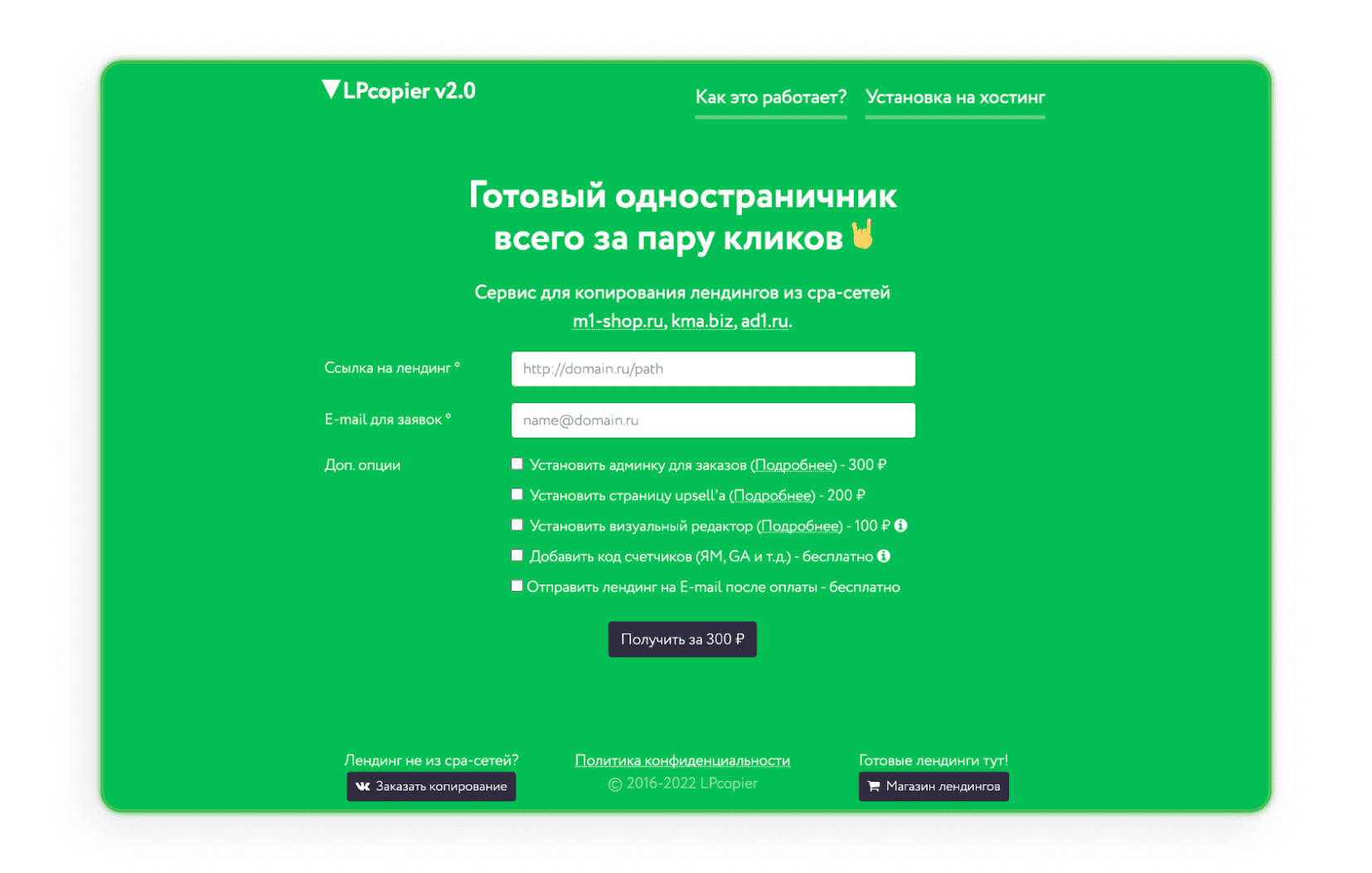

LPcopier

LPcopier is a Russian service that targets affiliate marketing. The portal allows scraping for about $5 per page. Additional services, such as installing analytics counters, are priced separately. It is also possible to order a landing page from outside the CPA network or a ready-made landing page. If Russian scares you, just use the translation option that Google offers.

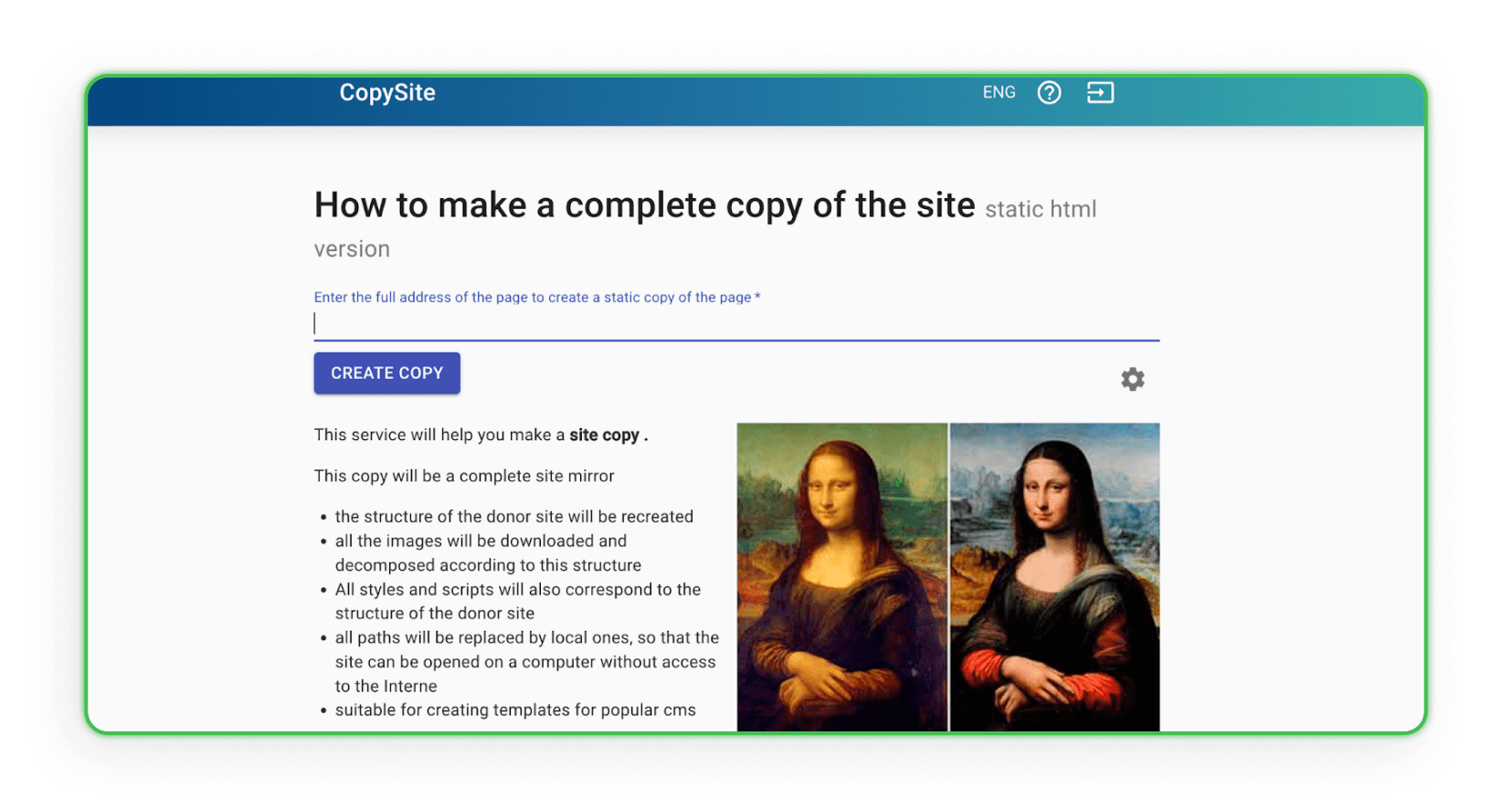

Xdan

The Xdan website is also a Russian website (available in English) offering CopySite, i.e., web scraping services. This website allows you to create a local copy of a landing page for free and choose to clean HTML counters or replace links or domains.

Copysta

The Russian Copysta service is one of the fastest services of this type. They declare that they will contact you within 15 minutes. The web scraping is done via a link, and you can update the website for an additional fee.

I downloaded the website. What's next?

Have you already downloaded a website? Great, now you have to think about what you want to do with it. You certainly want to modify it a bit. How?

How do I redesign the copied page?

To redesign the copied page for your needs, work on your local copy and change it however you like. To make changes to the structure, you can use any editor that allows you to work with the code, such as Visual Studio Code, Notepad++ (Windows), TextEdit (macOS), or Sublime Text. Open the editor that is convenient for you, customise the code, then save it and see how the changes are displayed in the browser. Edit the visual appearance of HTML tags using CSS, add web forms, action buttons, links, etc. After saving, the modified file will remain on the computer with the updated functions, layout and targeted actions.

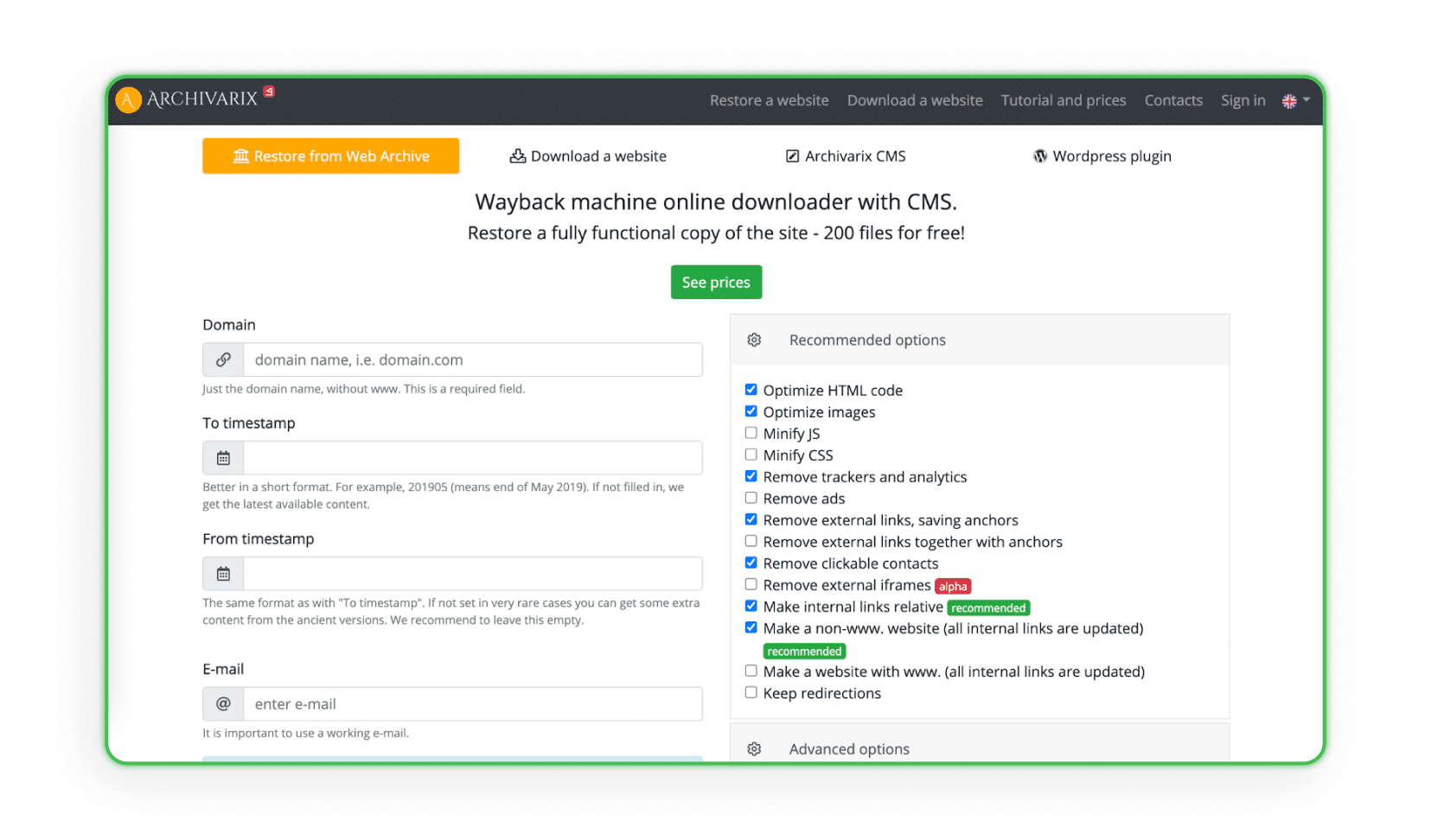

Some services restore websites from web archives and come with their own website creation and management system (CMS). Such a system duplicates the project together with an admin panel and disk space. Archivarix is an example of such a service (the program can restore and archive a project).

Uploading websites to hosting

The last and most important step in web scraping of landing pages is uploading them to your hosting. Remember that copying a page and making small visual changes is not enough. Other people's affiliate links, scripts, tracking pixels, Metrica JS codes, and other counters almost always remain in the page's code. They must be removed manually (or with paid programs) before uploading to your hosting. If you want to know exactly how to upload your website to hosting, check out our article: “How to create a landing page? Creating a website step by step”.
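
Before uploading, it helps to list what is still pointing at someone else's servers. The sketch below is a hedged example that uses Python's standard `html.parser` to report scripts loaded from other hosts, inline scripts, and links, forms, iframes and images that target other hosts. The names `LeftoverAuditor` and `audit` and the host `my-site.example` are hypothetical. The script only reports candidates for manual review and does not remove anything.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LeftoverAuditor(HTMLParser):
    """Collect script sources and link targets that point to foreign hosts."""

    def __init__(self, own_host: str):
        super().__init__()
        self.own_host = own_host
        self.inline_scripts = 0      # <script> blocks without a src attribute
        self.external_scripts = []   # script URLs hosted elsewhere
        self.foreign_links = []      # links, forms, iframes, images hosted elsewhere

    def _is_foreign(self, url: str) -> bool:
        host = urlparse(url).netloc
        # Relative URLs have no host and therefore stay on your own domain.
        return bool(host) and host != self.own_host

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "script":
            src = attr_map.get("src")
            if src is None:
                self.inline_scripts += 1
            elif self._is_foreign(src):
                self.external_scripts.append(src)
        elif tag in ("a", "form", "iframe", "img"):
            target = attr_map.get("href") or attr_map.get("action") or attr_map.get("src")
            if target and self._is_foreign(target):
                self.foreign_links.append(target)


def audit(html: str, own_host: str) -> LeftoverAuditor:
    """Parse `html` and return the auditor holding everything it found."""
    auditor = LeftoverAuditor(own_host)
    auditor.feed(html)
    auditor.close()
    return auditor


if __name__ == "__main__":
    # Hypothetical snippet of a copied landing page.
    page = '<a href="https://tracker.example.net/click?id=1">Buy</a><script>var x=1;</script>'
    report = audit(page, "my-site.example")
    print(report.foreign_links, report.inline_scripts)
```

Inline scripts are only counted, because deciding whether one is a counter or part of the page logic still requires reading it.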

How to defend against web scraping?

Protection against web scraping is essential for maintaining the privacy and security of your website and its data. You can apply several effective methods to minimise the risk of web scraping attacks.

• Robots.txt - Using the robots.txt file is a standard way to communicate with search robots. You can specify which parts of your site should be searched and which should not. Although honest bots usually follow these guidelines, it's worth noting that this file doesn't guarantee protection against all scraping bots.

• .htaccess - Through the .htaccess file, you can block access for specific User Agents that bots may use. It's one way to prevent unwanted bots from accessing your website.

• CSRF (Cross-Site Request Forgery) - The CSRF mechanism can secure forms and interactions with your site against automatic scraping. This might involve using CSRF tokens in forms.

• IP Address Filtering - You can limit access to your website only to specific IP addresses, which can help minimise web scraping attacks.

• CAPTCHA - Adding CAPTCHA to forms and interactions can make it difficult for bots to interact with your site automatically. It's one of the most popular defences against automatic scraping.

• Rate Limiting with mod_qos on Apache servers - Setting limits for the number of requests from a single IP address within a specified time can limit the possibility of automatically downloading large amounts of data in a short time.

• Scrape Shield - The Scrape Shield service offered by Cloudflare is an advanced tool for detecting and blocking web scraping actions, which can assist in protecting your site.
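
To make the rate limiting idea from the list above more concrete, here is a small Python sketch of a sliding-window limiter that allows a fixed number of requests per IP address within a time window. It is not how mod_qos is configured or implemented. The class name `SlidingWindowLimiter` and its parameters are assumptions chosen for this illustration.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Maps a client IP to the timestamps of its recent accepted requests.
        self._hits = defaultdict(deque)

    def allow(self, client_ip: str, now: Optional[float] = None) -> bool:
        """Return True if the request may proceed, False if it should be rejected."""
        now = time.monotonic() if now is None else now
        hits = self._hits[client_ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=2, window_seconds=10.0)
    for second in (0.0, 1.0, 2.0):
        print(second, limiter.allow("203.0.113.7", now=second))
```

In a real deployment the limit is usually enforced by the web server or a proxy in front of it, so that rejected requests never reach the application.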

If you've ever noticed that your landing page has fallen victim to web scraping techniques, there is a way to redirect some of the traffic back to your page.

On the Afflift forum, you will find a simple JavaScript code. Place it on your page, and it will protect you from the complete loss of traffic in case of web scraping.

The code can be found in THIS THREAD.

Good to see you here!

We hope you already know what web scraping is, how to download a web page, and, most importantly, how to comply with copyright laws. Now it's your turn to make your move and start earning. However, if you have questions about affiliate marketing or need to know which program to choose, please contact us.

Have any questions? Feel free to reach us through our channels.